9 A/B Testing Mistakes Made by Everyone

A/B testing is a powerful tool that is not used often enough, which is a shame, because nothing else gives you as much control when designing an optimal user experience. The problem is that, even when A/B testing is carried out, it is often done improperly. That leads to erroneous conclusions, and you end up losing time and money because you have focused on something that wasn’t right in the first place.

To help you avoid the mistakes everyone makes, we got in touch with David Myles, a manager at Essayontime, to get his take on A/B testing, which his company does a lot in order to provide the best user experience. He pointed out the following 9 mistakes, along with the solutions.

1. Quitting the Test Too Soon

Let’s say you are testing two different versions of your new website. During the first few days, the first one is way ahead of the second, so you end the test and declare the first version a clear winner. Wrong! Let’s take a look at the example below and see why:

The test was done by SumoMe, and it shows the results for two different popups. The second one sports some great numbers, 82.97%, which would look fine in most cases, but if we take a look at the first one, it has a 99.95% chance of being the winner! Your aim should be at least 95% confidence, because then there is only about a 5% chance that it’s the wrong choice.
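To make the 95% threshold concrete, here is a minimal Python sketch of one common way to estimate such a confidence figure: a one-sided, pooled two-proportion z-test. This is an illustrative assumption, not necessarily how SumoMe or any other tool computes its numbers, and the function name and the visitor counts are hypothetical.

```python
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def confidence_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Rough confidence that version B converts better than version A.

    Uses a pooled two-proportion z-test (one-sided). conv_* are
    conversion counts, n_* are visitor counts. Hypothetical helper.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No conversions (or only conversions) in both groups: no evidence.
        return 0.5
    return normal_cdf((rate_b - rate_a) / std_err)


if __name__ == "__main__":
    # Same conversion rates (10% vs. 15%), but ten times fewer visitors
    # in the first call. Made-up numbers for illustration only.
    print(confidence_b_beats_a(10, 100, 15, 100))
    print(confidence_b_beats_a(100, 1000, 150, 1000))
```

With the same observed rates, the smaller sample stays below the 95% mark while the larger one clears it, which is exactly why a lead in the first few days is not enough to call a winner.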

2. Not Having a Hypothesis

When you are testing without a hypothesis, you can end up with some pretty random results which are hard to interpret. In short, you will not learn anything new about your audience’s habits or behavior. You need something to base your tests on, whether it’s the traffic, bounce rate, or anything else. Things like analytics data, heat maps, as well as polls and surveys are a true goldmine of data which you can build your hypothesis on.

3. Discarding Failed Tests

A while back, we conducted a test that took 6 rounds before we were able to pick the winner. Although the winning design ultimately did 80% better than the other one, it was leading by only 13% during the first round. After tweaking the variables, we were finally able to see the results that we had anticipated. Had we discarded the test after the first round, we would never have gotten there. You can also check a similar example done by CXL.

4. Tests with Overlapping Traffic

Running several tests at once on the same visitors might seem like a great way to save time and some cash, but the problem is that the results might be a tad misleading because of the overlapping traffic. It’s always better to run a separate test, but if you really must test this way, at least make sure there is an even traffic distribution between the versions you are testing.
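One way to get an even split, sketched here in Python as an assumption rather than a prescribed method, is to assign each visitor to a version by hashing the visitor ID together with the test name. The function name, the test names, and the ID format are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant of one experiment.

    Hashing the experiment name together with the user ID means the
    same visitor always sees the same version of a given test, while
    assignments in different tests are effectively unrelated.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical visitor taking part in two overlapping tests.
    print(assign_variant("visitor-42", "popup-test"))
    print(assign_variant("visitor-42", "headline-test"))
```

Because the test name is part of the hash, visitors who see version A of one test should be spread roughly evenly across the versions of any other test running at the same time.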

5. Not Running Tests Continuously

Tests are not something to be done just a couple of times every so often. You need to test everything all the time, because that’s how you get more data that will provide you with an edge over your competitors and enable you to provide a better user experience. Also, new trends are always coming and going, and testing is one of the ways you can keep up.

6. Testing when There Is Not Enough Traffic or Conversions

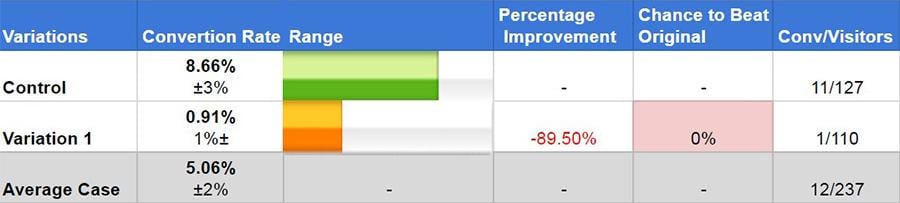

Before reading any further, take a look at the case study done by CXL. The control version was winning by a landslide, and the variation had a really small chance of coming out on top, as can be seen in the screenshot.

At that point, however, the test had collected only a small number of visits, which is not nearly enough. Once there was more traffic, the results looked like this:

This is why it’s so important to test with a sample that is large enough to produce statistically significant results.
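How much traffic is "enough" can be estimated before the test starts. The Python sketch below uses the standard normal-approximation formula for comparing two conversion rates; the function name, the default 5% significance level, the 80% power, and the example rates are assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variation.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    relative_lift: smallest relative improvement worth detecting,
                   e.g. 0.20 for a 20% lift (5% -> 6%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be in (0, 1) and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical site: 5% baseline conversion, hoping to detect a 20% lift.
    print(visitors_per_variation(0.05, 0.20))
```

The smaller the improvement you want to detect, the more visitors each variation needs, so low-traffic sites are better off testing bold changes than subtle ones.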

7. Changing Parameters during Testing

Your testing parameters, such as traffic distribution, goals, settings, and so on, should remain fixed during the testing process. In case you get a brand-new idea, save it for a separate test, because applying it to the test which is already taking place will produce inaccurate numbers.

8. Having Too Many Variations

Having as many variations as possible looks good on paper, but in reality, it slows down the testing, and it takes a long time to see any real results. An example from Google illustrates this perfectly. They tested 41 shades of blue to see which one was the favorite among users. But having this many variations meant that 95% confidence was not enough, because the chance of at least one false positive was close to 90%.
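The arithmetic behind that figure can be sketched in a few lines of Python. The sketch assumes that each comparison is independent and run at the same significance level, which is a simplification; the function names are hypothetical, and the Bonferroni correction is shown as one common remedy, not as what Google did.

```python
def family_wise_error_rate(num_comparisons: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** num_comparisons


def bonferroni_alpha(num_comparisons: int, alpha: float = 0.05) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / num_comparisons


if __name__ == "__main__":
    for m in (1, 5, 41):
        print(m, round(family_wise_error_rate(m), 3), round(bonferroni_alpha(m), 5))
```

Under these assumptions, 41 comparisons at 95% confidence each give roughly an 88% chance of at least one false positive, which is in line with the figure quoted above.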

9. Not Considering Validity Threats

Once you have all the results in place, you can interpret them, but before you do that, you need to check all the parameters and all the metrics that are pertinent to your test. If any of them is not producing results or is slightly off, you need to find out why it’s behaving that way.

Conclusion

A/B testing should be the cornerstone of your online business or any kind of online endeavor. It is complex and time-consuming, but it’s incredibly effective. On top of that, you will finally be able to know what works and why, and save some money in the process.
